Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

Large language models (LLMs) often generate plausible but factually incorrect outputs; in other words, they make things up. These “hallucinations” can damage reliability in information-critical tasks such as medical diagnosis, legal analysis, financial reporting and scientific research.

Retrieval-Augmented Generation (RAG) mitigates this problem by integrating external data sources, which lets LLMs access information in real time during generation. This reduces errors and, by grounding responses in actual data, improves contextual accuracy. Implementing RAG effectively requires substantial memory and storage resources, particularly for large-scale vector and index data. Traditionally, this data has been stored in DRAM, which, while fast, is both expensive and limited in capacity.
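The retrieval step described above can be sketched in a few lines of Python. This is a minimal illustration, not a production pipeline: the bag-of-words `embed` function stands in for a real embedding model, and the names `embed`, `cosine`, `retrieve`, `build_prompt` and the sample `DOCS` are all hypothetical, chosen only for this example.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: bag-of-words term counts (assumption: stands in for a real model)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[term] * b[term] for term in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, documents: list[str], k: int = 1) -> list[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: list[str], k: int = 1) -> str:
    """Prepend the retrieved context so the model can ground its answer in it."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


# Hypothetical document store; a real deployment would hold millions of vectors.
DOCS = [
    "AiSAQ stores vector and index data on SSDs",
    "DRAM is fast but expensive and limited in capacity",
    "CES is a trade show held every year",
]

prompt = build_prompt("where does AiSAQ store index data", DOCS)
```

The resulting `prompt` would then be passed to the language model. In a real system the linear scan in `retrieve` is replaced by an approximate nearest neighbor index, which is the vector and index data whose size drives the memory demands discussed here.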

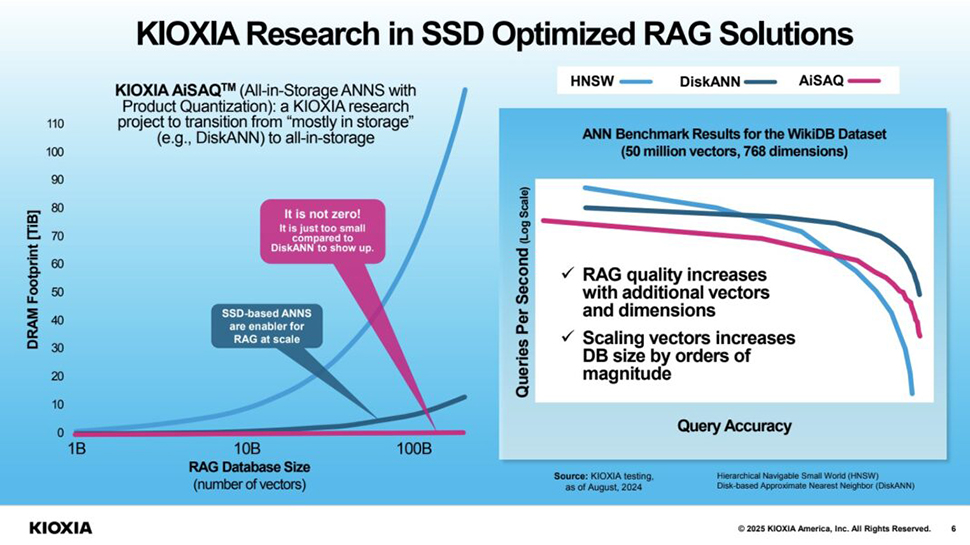

To address these challenges, Kioxia introduced AiSAQ at this year’s CES, ServeTheHome reports. AiSAQ, short for All-in-Storage Approximate Nearest Neighbor Search (ANNS) with Product Quantization, uses high-capacity SSDs to store vector and index data. The Japanese memory giant says that AiSAQ significantly reduces DRAM usage compared to DiskANN, offering a cheaper and more scalable approach to support large AI models.
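For readers unfamiliar with product quantization, the sketch below shows the general idea: a vector is split into sub-vectors, and each sub-vector is replaced by the index of its nearest centroid in a small per-subspace codebook. This illustrates the generic technique only, not Kioxia’s implementation, whose details the report does not give. The function names and the hand-picked `CODEBOOKS` are hypothetical; real systems learn their codebooks from data, typically with k-means.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length sequences."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def pq_encode(vector, codebooks):
    """Encode a vector as one centroid index per subspace."""
    sub_dim = len(vector) // len(codebooks)
    codes = []
    for i, centroids in enumerate(codebooks):
        sub = vector[i * sub_dim:(i + 1) * sub_dim]
        nearest = min(
            range(len(centroids)),
            key=lambda j: squared_distance(sub, centroids[j]),
        )
        codes.append(nearest)
    return codes


def pq_decode(codes, codebooks):
    """Rebuild an approximation of the vector from its codes."""
    approx = []
    for code, centroids in zip(codes, codebooks):
        approx.extend(centroids[code])
    return approx


# Hypothetical codebooks: 2 subspaces of 2 dimensions, 2 centroids each.
CODEBOOKS = [
    [[0.0, 0.0], [1.0, 1.0]],
    [[0.0, 1.0], [1.0, 0.0]],
]

codes = pq_encode([0.9, 1.1, 0.1, 0.8], CODEBOOKS)   # [1, 0]
approx = pq_decode(codes, CODEBOOKS)                 # [1.0, 1.0, 0.0, 1.0]
```

Here a four-value vector is stored as two small integers. The reconstruction is approximate, which is why product quantization pairs naturally with approximate nearest neighbor search.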

Moving to SSD-based storage allows the management of larger datasets without the high costs associated with extensive DRAM use.

While accessing data from SSDs may add some latency compared to DRAM, the trade-off includes lower system costs and improved scalability. That in turn can support better accuracy, since larger datasets give the model a richer basis to draw on at inference time.
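A rough back-of-the-envelope calculation shows why capacity becomes the limiting factor. The figures below are illustrative assumptions only (one billion 128-dimensional float32 vectors, 32 one-byte codes per vector after product quantization); they are not Kioxia’s numbers, and the helper names are hypothetical.

```python
def raw_bytes(num_vectors: int, dim: int, bytes_per_value: int = 4) -> int:
    """Size of uncompressed vectors (float32 by default)."""
    return num_vectors * dim * bytes_per_value


def pq_bytes(num_vectors: int, num_subspaces: int, bits_per_code: int = 8) -> int:
    """Size of product-quantized codes: one code per subspace per vector."""
    return num_vectors * num_subspaces * bits_per_code // 8


# Assumed workload, for illustration only.
NUM_VECTORS = 1_000_000_000
DIM = 128
NUM_SUBSPACES = 32

full = raw_bytes(NUM_VECTORS, DIM)              # 512_000_000_000 bytes (512 GB)
compressed = pq_bytes(NUM_VECTORS, NUM_SUBSPACES)  # 32_000_000_000 bytes (32 GB)

print(f"raw vectors: {full / 1e9:.0f} GB")
print(f"PQ codes:    {compressed / 1e9:.0f} GB")
```

Under these assumptions even the compressed codes occupy tens of gigabytes, and the footprint grows linearly with the number of vectors, so whichever of these structures must sit in DRAM sets the cost of scaling up.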

Using high-capacity SSDs, AiSAQ meets RAG’s storage demands while contributing to the broader goal of making advanced AI technologies more accessible and affordable. Kioxia has not revealed when it plans to bring AiSAQ to market, but it’s a safe bet that rivals like Micron and SK Hynix will have something similar in the works.

ServeTheHome concludes, “Everything is AI these days, and Kioxia is also pushing this. Realistically, RAG will be an important part of many applications, and if there is an application that needs to access a lot of data, but is not used as often, this would be a great opportunity for something like Kioxia AiSAQ.”