Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

Nvidia has become the undisputed leader in AI hardware thanks to its powerful GPUs. Its market value soared in 2024, briefly making it the most valuable company in the world, surpassing even Apple and Microsoft. As of December 2024, its market capitalization was about $3.3 trillion.

But despite this apparent dominance, Nvidia faces stiff competition when it comes to AI. AMD, its main rival, continues to challenge it, and Intel and Broadcom are also investing heavily to capture a larger share of this fast-growing industry.

In addition to these long-established players, several startups are making significant strides in AI hardware, including Tenstorrent, Groq, Celestial AI, Enfabrica, SambaNova, and Hailo. We’ve rounded up the top 10 startups that we believe are not only challenging the dominance of established companies but also pushing the limits of what AI hardware can achieve, positioning them as key innovators to watch in 2025.

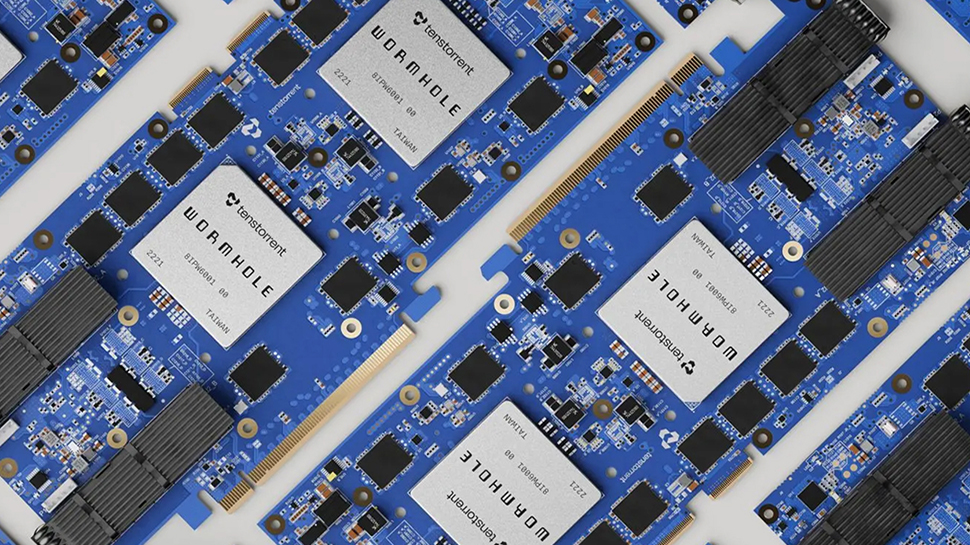

Tenstorrent, led by CEO Jim Keller (best known for his work on AMD’s Zen architecture and Tesla’s original self-driving chip), focuses on developing AI processors designed to boost performance and efficiency across training and inference workloads.

By combining its Tensix cores with open-source software stacks, Tenstorrent offers an alternative to the costly HBM favored by competitors such as Nvidia. The company also licenses AI and RISC-V intellectual property to customers looking for customizable silicon solutions. We have previously written about its Grayskull (entry-level AI hardware for developers) and Wormhole (networked AI) products.

Tenstorrent is an obvious choice as one of our top 10 AI companies to watch in 2025, as it recently closed a $700 million Series D funding round that values the startup at $2.6 billion. The round was led by Samsung Securities, with additional investments from LG Electronics, Fidelity, and Bezos Expeditions, the venture capital firm launched by former Amazon CEO Jeff Bezos.

Mythic is a startup specializing in analog AI chips that provide energy-efficient solutions for AI inference, especially in edge applications such as IoT, robotics, and consumer devices. Despite running out of capital in 2022, Mythic rebounded with timely additional funding. Its next-generation processor features a new software toolkit and a simplified architecture, making analog computing more accessible.

Mythic is headquartered in Austin, Texas, and Silicon Valley, and is led by Dr. Taner Ozcelik, former VP & GM of Nvidia’s automotive division. Its analog compute-in-memory technology is said to enable chips that are 10 times more affordable, consume 3.8 times less power, and perform 2.6 times faster than industry-standard digital AI inference CPUs.

Its Analog Matrix Processors (AMPs) integrate flash memory with analog computing to enable low-power, high-performance AI inference. Each AMP tile includes a Mythic ACE, which combines flash memory, analog-to-digital converters (ADCs), a digital subsystem with a 32-bit RISC-V processor, a SIMD vector engine, and a high-performance network-on-chip (NoC). This design allows Mythic’s chips to deliver up to 25 TOPS of AI performance while minimizing power consumption and thermal challenges.

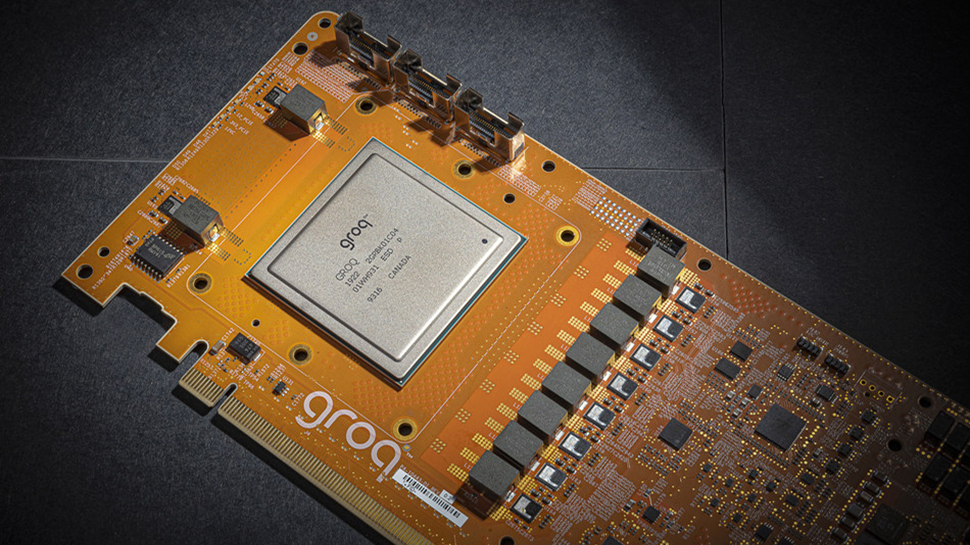

Groq (not to be confused with Grok, Elon Musk’s AI chatbot) is led by ex-Google engineer Jonathan Ross, who originally designed Google’s tensor processing unit (TPU). The startup develops tensor streaming processors (TSPs) that are optimized for AI workloads in data centers.

The company started life with a software-first approach to AI hardware design, which led to the creation of its Language Processing Unit (LPU), a chip built to run AI applications at very high speed. Groq’s first public demo was an ultra-fast Q&A engine that generated AI responses hundreds of words long in less than a second.

The company currently offers GroqCloud, which enables developers and enterprises to rapidly build AI applications, and GroqRack, which provides various interconnected rack configurations for on-site deployment.

You can try Groq and get an idea of its speed at GroqChat.

Blumind, a Canadian analog AI chip startup established in 2020, focuses on creating high-performance, low-power AI solutions for edge computing applications in areas such as wearables, smart home devices, industrial automation, and mobility.

Based on standard CMOS technology, Blumind’s approach enables always-on AI processing with minimal power consumption. The startup’s first product, an analog keyword spotting chip, is set for volume production in 2025 and will be available as a standalone chip and a chiplet that integrates into microcontroller units.

Looking to the future, Blumind says it hopes to scale its analog architecture to vision CNNs and, ultimately, gigabit-sized small language models (SLMs).

Lightmatter appears to be leading the way in photonic computing. Based in Boston, the startup develops AI hardware that uses light for faster, more energy-efficient data processing. Its CEO, Nicholas Harris, PhD, has more than 30 patents and 70 journal publications, with a focus on quantum and classical information processing with integrated photonics.

Lightmatter’s first product, the Passage 3D Silicon Photonics Engine, is described as the world’s fastest interconnect, supporting configurations from single-chip to wafer-scale systems.

The startup’s next-generation AI computing platform, Envise, integrates photonics and electronics in a compact design. It supports standard deep learning frameworks and model formats, offering a complete set of tools, including a compiler and runtime, to provide high inference speed and accuracy.

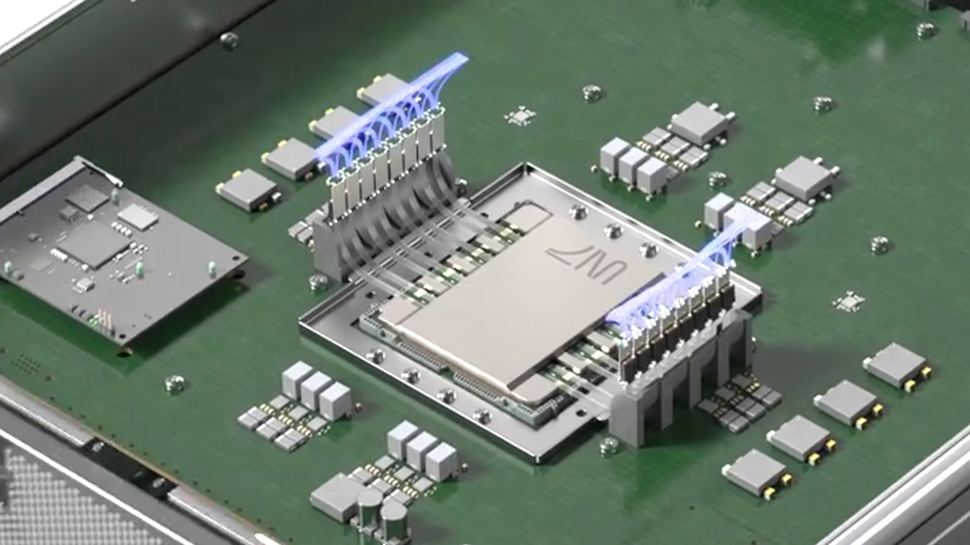

Untether AI, founded in 2018 and based in Toronto, Canada, specializes in developing high-performance AI chips designed to accelerate AI inference workloads. Its “in-memory” computing architecture minimizes data movement by placing processing elements adjacent to memory, significantly increasing efficiency and performance.

For OEMs and on-prem data centers, the company offers a selection of AI accelerator cards in the PCI-Express form factor, including the 75-watt speedAI240 Slim accelerator card, which features the next-generation speedAI240 IC for high performance and reduced energy consumption.

Untether AI’s inference accelerator ICs include the speedAI240, which has more than 1,400 custom RISC-V processors in its unique in-memory architecture. These devices are specifically designed for AI inference workloads and can deliver up to 2 petaflops of inference performance and up to 20 teraflops per watt.

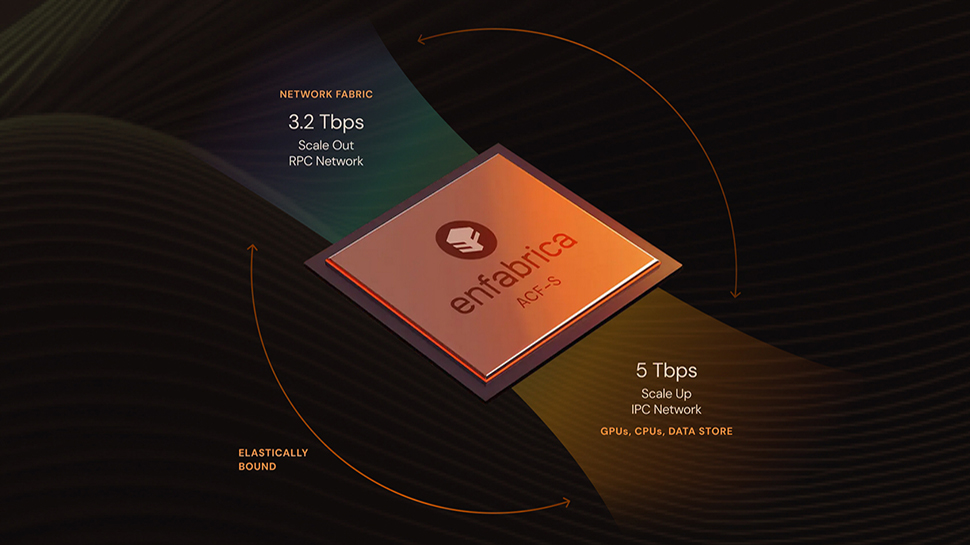

Enfabrica wants to revolutionize networking for the era of generative AI. The Mountain View, Calif.-based startup produces Accelerated Compute Fabric SuperNIC (ACF-S) silicon and system-level solutions designed from the ground up to efficiently connect and move data across all endpoints in modern AI data center infrastructure.

Under the leadership of Rochan Sankar, former Senior Director at Broadcom, Enfabrica announced the release of the “world’s fastest” GPU Network Interface Controller chip in November 2024. Designed with high-radix, high-bandwidth capabilities, the ACF SuperNIC delivers an impressive 3.2 Tbps of bandwidth per accelerator and will be available in limited quantities starting in Q1 2025.

With 800, 400, and 100 Gigabit Ethernet interfaces, 32 high-radix network ports, and 160 PCIe lanes on a single ACF-S chip, this technology allows AI clusters of more than 500,000 GPUs to be built. The design uses a two-tier network to provide high scale-out throughput and low end-to-end latency across all GPUs in the cluster.
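The 3.2 Tbps per-accelerator figure quoted above is consistent with the listed port options. The following is a quick arithmetic check, assuming (this is our simplification, not something Enfabrica states here) that aggregate bandwidth is simply port count multiplied by port speed. The function name `ports_needed` is ours, for illustration only.

```python
# Assumption: aggregate bandwidth = number of ports x per-port speed.
TARGET_GBPS = 3200  # 3.2 Tbps, the per-accelerator figure quoted in the article


def ports_needed(port_speed_gbps: int, target_gbps: int = TARGET_GBPS) -> int:
    """Return how many ports of the given speed add up to the target bandwidth."""
    ports, remainder = divmod(target_gbps, port_speed_gbps)
    if remainder:
        raise ValueError("port speed does not divide the target evenly")
    return ports


# The three Ethernet speeds named in the article: 800, 400 and 100 GbE.
port_options = {speed: ports_needed(speed) for speed in (800, 400, 100)}

for speed, ports in port_options.items():
    print(f"{ports} x {speed} GbE = {speed * ports / 1000} Tbps")
```

Under that assumption, 32 ports at 100 GbE, 8 at 400 GbE, or 4 at 800 GbE each add up to 3.2 Tbps, which matches the 32-port count given for the chip.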

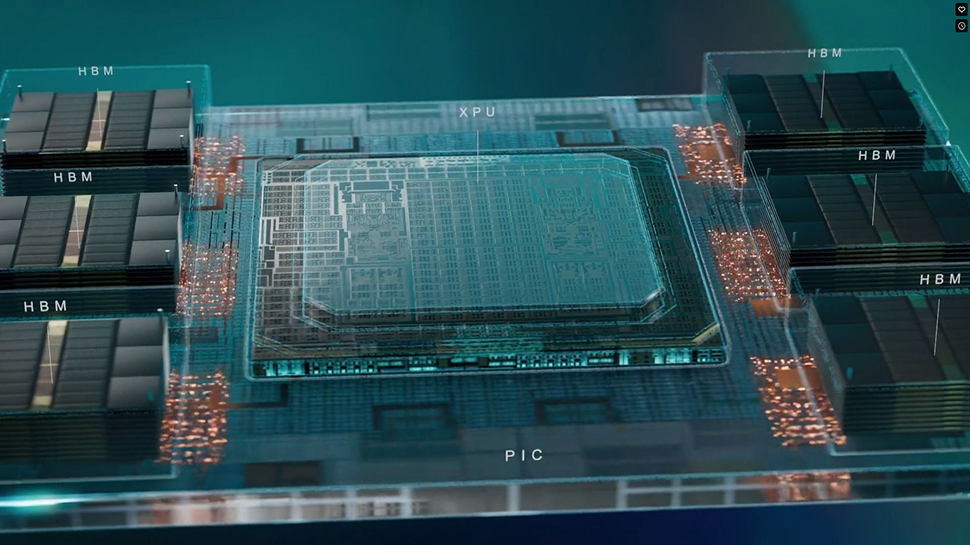

Celestial AI, based in Santa Clara, California, is one of many startups working to redefine optical interconnects. Its Photonic Fabric technology is designed to disaggregate AI compute from memory, offering what the company describes as a “transformative leap in AI system performance” that is ten years ahead of existing technologies.

Celestial AI claims its Photonic Fabric is the industry’s only solution capable of breaking through the memory wall and delivering data directly to the point of compute. It supports current HBM3E and upcoming HBM4 bandwidth and latency requirements while maintaining ultra-low power consumption in the single-digit pJ/bit range.
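To put a pJ/bit figure in context, interconnect power is energy per bit multiplied by bits per second. The sketch below is a unit conversion only; the 5 pJ/bit and 10 Tbps inputs are hypothetical values chosen for illustration and are not Celestial AI specifications.

```python
def link_power_watts(energy_pj_per_bit: float, bandwidth_tbps: float) -> float:
    """Convert energy per bit and link bandwidth into power.

    1 pJ = 1e-12 J and 1 Tbps = 1e12 bit/s, so the scale factors cancel:
    (pJ/bit) x (Tbit/s) gives watts directly.
    """
    return energy_pj_per_bit * bandwidth_tbps


# Hypothetical example: a link moving 10 Tbps at 5 pJ/bit.
example_power = link_power_watts(energy_pj_per_bit=5, bandwidth_tbps=10)
print(f"{example_power} W")
```

The same relationship shows why energy per bit matters at scale: at a fixed bandwidth, halving the pJ/bit figure halves the power spent moving data.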

It’s not just us who think Celestial AI should be on your radar for 2025, as the company was deservedly recognized with the “Start-Up to Watch” award at the GSA Awards Celebration in December 2024.

SambaNova, led by co-founder and CEO Rodrigo Liang, who was previously responsible for SPARC processor and ASIC development at Oracle, is a SoftBank-funded company that builds reconfigurable dataflow units (RDUs). SambaNova describes its RDUs as “the GPU alternative” for accelerating AI training and inference, especially in enterprise and cloud environments.

Powered by the SambaNova Suite, Samba-1 was the startup’s first trillion-parameter generative AI model. It uses a Composition of Experts (CoE) architecture that aggregates several smaller “expert” models into a single, larger solution.

For generative AI development, there is SambaNova DataScale, which is said to be the “world’s fastest hardware platform for AI.” It is powered by the SN40L RDU, which has a three-tier memory architecture, and a single system node supports up to 5 trillion parameters.

SambaNova was named the “Most Respected Private Semiconductor Company” at the 2024 GSA Awards Celebration, recognizing its industry-leading products, visionary approach and promising future opportunities. (Tenstorrent was a runner-up in the same category).

Hailo, based in Tel Aviv, Israel, develops what it says are the world’s best edge AI processors, uniquely designed for high-performance deep learning applications on edge devices.

Hailo co-processors, including the Hailo-8 and Hailo-10H, integrate with leading platforms and support a wide range of neural networks, vision transformer models, and LLMs. In addition, the company offers the Hailo-15 AI Vision Processor (VPU), an AI-centric camera SoC that provides high-performance AI processing for functions such as image enhancement, AI-driven video pipelines, and advanced analytics.

Applications for Hailo’s processors include automotive, security, industrial automation, retail, and personal computing. The startup also announced several partnerships for its processors, including with the Raspberry Pi Foundation to provide AI accelerators for the Raspberry Pi AI Kit, an add-on for the Raspberry Pi 5.